Self-Hosting Next.js

Separating Frontend and Backend

Running a Next.js application with a separate backend is not a simple task. Next is a full-stack framework, and things are much easier when you can avoid mixing in another backend technology. Avoid shooting yourself in the foot.

If you're stubborn and still feel the masochistic urge to go this route, read on. It's what I did, and after countless debugging nightmares I have a lot of misgivings about that decision. If I had to start over, I'd seriously consider going 100% Next or using a different frontend altogether.

Self-hosting plants even more mines in the minefield of challenges that come with setting up a production Next application. On this page, I'll share my experience and the pitfalls of running Next without Vercel support (and its $$$ costs) on a generic virtual private server (VPS).

Memory Requirements

The predecessor of this application used PHP. Like the current application, it was Dockerized (though with fewer containers). I never had memory issues with it, and it ran on a 2GB/1vCPU Digitalocean VPS for $12/mo.

The updated application replaces PHP with a Node frontend and a Python backend. It's also split into six Docker containers. Some containers have minuscule memory requirements (less than 30MB in some cases). However, the frontend and backend containers regularly hover between 100 and 900MB, depending on traffic.

While setting up the Next application on a VPS with similar specs, I couldn't even install my Node dependencies: running npm install needed so much memory that it crashed my frontend container. By increasing the VPS memory step by step, I finally got the dependencies to install at 4GB of RAM.

Unfortunately, even with this relatively generous amount of memory, the Next production build still failed. Running next build would stall, and the container would get caught in a restart loop.

Debugging this issue was quite difficult. For a long time, I thought the build was crashing because it couldn't connect to fonts.googleapis.com, since the container was repeatedly killed right as the build started fetching Google Fonts:

```text
request to https://fonts.googleapis.com/css2?family=JetBrains+Mono:wght@100..800&display=swap failed, reason:

Retrying 1/3...
```

As it turns out, this was just a coincidence. The combined memory requirements of the containers (plus the operating system's normal memory usage) were still too great for a 4GB VPS!

Luckily, Digitalocean bills by the hour, so I was able to run a final test with double the RAM. I finally got a successful build on an 8GB Digitalocean "Droplet" (their proprietary name for a VPS). At $48/mo, however, this was not sustainable.

There was no way I could justify paying $576/year for a hobby site. I could barely justify it when it was $144/year! At this point, I was feeling quite disappointed. Then I discovered Hetzner.

Hetzner

After some research (Googling and Reddit), I found that Hetzner provides ARM-architecture VPSs with 8GB of RAM for $7/mo! Not only was this a major upgrade from my existing Digitalocean server, with 4x the memory, but I was also paying $5 less per month.
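As a quick sanity check on those numbers, here is a small TypeScript sketch that turns the monthly prices quoted in this post into yearly costs and a rough price per GB of RAM. The plan labels are just descriptive names, and the figures are the prices as quoted here, which may have changed since.

```typescript
// Monthly prices and RAM sizes as quoted in this post; real prices may differ.
interface Plan {
  name: string;
  monthlyUsd: number;
  ramGb: number;
}

const plans: Plan[] = [
  { name: "Digitalocean 2GB/1vCPU", monthlyUsd: 12, ramGb: 2 },
  { name: "Digitalocean 8GB", monthlyUsd: 48, ramGb: 8 },
  { name: "Hetzner ARM 8GB", monthlyUsd: 7, ramGb: 8 },
];

// Twelve months at a flat monthly price (ignores taxes and hourly billing).
function yearlyCost(plan: Plan): number {
  return plan.monthlyUsd * 12;
}

// Price per GB of RAM per month: a rough way to compare plans of different sizes.
function usdPerGbMonth(plan: Plan): number {
  return plan.monthlyUsd / plan.ramGb;
}

for (const plan of plans) {
  console.log(
    `${plan.name}: $${yearlyCost(plan)}/year, $${usdPerGbMonth(plan).toFixed(2)} per GB-month`,
  );
}
// Digitalocean 2GB/1vCPU: $144/year, $6.00 per GB-month
// Digitalocean 8GB: $576/year, $6.00 per GB-month
// Hetzner ARM 8GB: $84/year, $0.88 per GB-month
```

Measured per GB of RAM, the Hetzner plan comes out at roughly one seventh the price of either Digitalocean plan.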

Migrating to Hetzner was the next step, and overall the process was fairly painless. I still have a soft spot in my heart for Digitalocean because they are extremely developer-friendly and have a wealth of documentation and resources that I still return to over and over. I don't regret starting with them as a novice Web developer.

Self-Hosting and fetch

While self-hosting behind an Nginx Web server, I've found the Node.js fetch implementation to be the bane of my existence. Async programming is not simple, and it can be very difficult to understand how a long chain of requests resolves.

To make matters worse, the undici package (which supplies Node's built-in fetch function) has several outstanding and poorly documented issues. It's painful enough that I'd be tempted to tell anyone reading this to ditch it and use axios for GET/POST requests instead.

504 Gateway Timeout with next/image

The first fetch-related issue I observed showed up in the blur placeholder mechanism of the next/image component. The Next image component displays a low-quality image placeholder (LQIP), which is swapped out for the optimized image once it has loaded.

After running a production build (next build followed by next start on the VPS), I was consistently getting a gateway timeout (504 status) on some optimized images. When loading a page with a lot of images, the LQIPs would be replaced on most of them. However, some images would never load, and their LQIP would be replaced with the image's alt text.

Strangely, the same broken images usually stayed broken on subsequent reloads, yet every time the service was restarted, a different set of images would break. In the logs, these events showed up as a 499 HTTP status code followed by a 504 gateway timeout about 30 seconds later.

The 499 HTTP status code is a non-standard code used by Nginx that "indicates that a client closed a connection before the server could respond." In my case, this pointed to a race condition, with the LQIP replacements happening farther along a broken chain of promises.

Obviously this was not ideal. After a lot of digging through the Next.js issues (at the time, ~2.8k open issues), I finally came across a suggestion on Stack Overflow to set the Nginx proxy_ignore_client_abort directive to on:

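```nginx
proxy_ignore_client_abort on;
```

The issue seemed to be that the client closed the connection before it was able to receive a response from the API (in my case, a Docker container with a RESTful service). With this directive on, Nginx keeps its connection to the proxied server open when the client disconnects, instead of closing it right away. After applying that configuration option and restarting Nginx and Next, all images seem to load as expected.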
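The client-abort behavior behind a 499 is easy to reproduce outside Nginx and Next. Below is a minimal, self-contained Node sketch in TypeScript, assuming Node 18.2 or later: a deliberately slow HTTP server stands in for the image optimizer, and a client destroys its socket before the reply arrives, much like a browser that navigates away. The function name simulateRequest and the delays are illustrative; no Nginx behavior is modeled, the sketch only shows how the upstream sees a client hanging up early.

```typescript
import * as http from "node:http";
import type { AddressInfo } from "node:net";

// Resolves true if the client hung up before the server finished replying
// (the situation Nginx logs as 499), false if the response went out normally.
function simulateRequest(serverDelayMs: number, clientGiveUpMs: number): Promise<boolean> {
  return new Promise((resolve) => {
    const server = http.createServer((_req, res) => {
      // Stand-in for a slow upstream, such as an image being optimized.
      const timer = setTimeout(() => res.end("optimized image bytes"), serverDelayMs);

      // "close" fires after a normal response AND when the connection drops
      // early; writableFinished tells the two cases apart.
      res.on("close", () => {
        clearTimeout(timer);
        const clientHungUp = !res.writableFinished;
        server.closeAllConnections(); // Node 18.2+
        server.close(() => resolve(clientHungUp));
      });
    });

    server.listen(0, "127.0.0.1", () => {
      const { port } = server.address() as AddressInfo;
      // agent: false gives this request its own socket instead of a pooled one.
      const req = http.get({ host: "127.0.0.1", port, agent: false }, (res) => res.resume());
      // Simulate a browser navigating away by tearing down the socket early.
      const giveUp = setTimeout(() => req.destroy(), clientGiveUpMs);
      req.on("close", () => clearTimeout(giveUp));
      // req.destroy() surfaces as an error on the client; expected here.
      req.on("error", () => {});
    });
  });
}

simulateRequest(1000, 100).then((hungUp) => {
  console.log(`client hung up before the response: ${hungUp}`);
});
```

On the server side, checking res.writableFinished inside a res "close" handler is a common Node pattern for telling an early disconnect apart from a completed response. With proxy_ignore_client_abort on, Nginx does not pass that kind of hang-up on to the proxied server; it keeps the upstream connection open until the response completes.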

Undici (UND_ERR_SOCKET)

Another hard problem I've run into while self-hosting Next.js is a seemingly random socket termination error:

```text
TypeError: fetch failed
    at Object.fetch (node:internal/deps/undici/undici:11576:11)
    at async invokeRequest (/app/node_modules/next/dist/server/lib/server-ipc/invoke-request.js:17:12)
    at async invokeRender (/app/node_modules/next/dist/server/lib/router-server.js:254:29)
    at async handleRequest (/app/node_modules/next/dist/server/lib/router-server.js:447:24)
    at async requestHandler (/app/node_modules/next/dist/server/lib/router-server.js:464:13)
    at async Server.<anonymous> (/app/node_modules/next/dist/server/lib/start-server.js:117:13) {
  cause: SocketError: other side closed
      at Socket.onSocketEnd (/app/node_modules/next/dist/compiled/undici/index.js:1:63301)
      at Socket.emit (node:events:526:35)
      at endReadableNT (node:internal/streams/readable:1376:12)
      at process.processTicksAndRejections (node:internal/process/task_queues:82:21) {
    code: 'UND_ERR_SOCKET',
    socket: {
      localAddress: '::1',
      localPort: 43952,
      remoteAddress: undefined,
      remotePort: undefined,
      remoteFamily: undefined,
      timeout: undefined,
      bytesWritten: 999,
      bytesRead: 542
    }
  }
}
```

These errors only appear when rapidly reloading or navigating quickly between pages. It has been incredibly difficult to pinpoint the exact cause. I can say with some certainty that the issue always ties back to how data fetches are processed.

If an async fetch operation is unable to consume a response before the connection is closed, Next will show the dreaded WSOD (White Screen of Death) along with a generic error message:

"Application error: a server-side exception has occurred (see the server logs for more information)."

If you inspect the browser console or your application logs, you may get a more descriptive error message. Still, the problem can be very elusive, so I can't give a definitive solution. I do strongly suspect that this issue always traces back to a bad or broken response from a RESTful API endpoint.

To start debugging, take a closer look at any async data fetching operations and promises that could be interrupted. Review your server logs to find which requests didn't receive a 200 response. I also recommend wrapping every await fetch() call, together with its response handling, in a try { ... } catch (error) { ... } block. In my experience, this handles fetch errors more gracefully.
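A minimal sketch of that try/catch pattern in TypeScript, assuming Node 18+ with the global fetch. The helper name safeFetchJson and its result shape are hypothetical, not a Next.js or undici API. The idea is to keep every await, including reading the body, inside the try block and return a typed failure instead of letting a rejected promise escape into the page render. The fetcher parameter is injectable so the sketch can be exercised without a network.

```typescript
// Hypothetical result shape: parsed data on success, a description on failure.
type FetchResult<T> =
  | { ok: true; data: T }
  | { ok: false; status?: number; error: string };

// Anything with the same call shape as the global fetch (Node 18+).
type Fetcher = (url: string, init?: RequestInit) => Promise<Response>;

// Hypothetical helper: every await happens inside try, so no rejection
// escapes to the caller.
async function safeFetchJson<T>(
  url: string,
  init: RequestInit = {},
  fetcher: Fetcher = fetch,
): Promise<FetchResult<T>> {
  try {
    const res = await fetcher(url, init);
    if (!res.ok) {
      // Non-2xx response: report the status rather than parsing an error page.
      return { ok: false, status: res.status, error: `HTTP ${res.status}` };
    }
    // If the socket closes mid-body, this await rejects and lands in catch.
    const data = (await res.json()) as T;
    return { ok: true, data };
  } catch (error) {
    // Node's fetch reports network failures as TypeError("fetch failed") with
    // the low-level error (e.g. code UND_ERR_SOCKET) attached as `cause`.
    // Invalid JSON also ends up here, as a SyntaxError without a cause code.
    const code = (error as { cause?: { code?: string } } | null)?.cause?.code;
    const message = error instanceof Error ? error.message : String(error);
    return { ok: false, error: code ? `${message} (${code})` : message };
  }
}
```

In a server component, you would then branch on result.ok and render a fallback instead of the generic application error, e.g. `const result = await safeFetchJson<Post[]>(url)`, where Post and url stand in for your own type and API endpoint.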

Why Next.js?

At times, Next.js can be furiously frustrating to use. The change from the pages router to the app router was dramatic and took a lot of refactoring. Some of the 2.5k+ open GitHub issues have gone unanswered by Vercel for 3 years or more.

Next.js is open-source software, but it's still under the umbrella of Vercel, and I'm sure they're making a hefty sum from their Next.js cloud deployment platform. In my experience, they move faster and break more things than Meta has with React.

So why the hell would a developer keep using Next?

1. React with batteries included

The hardest part about learning React, for me personally, was understanding how to configure my build tools and development environment. Next.js bundles these build and linting tools together with sane defaults.

Another common need I ran into in Web development was a simple file-based routing mechanism for serving markdown. Next supports this out of the box.

Next also helped me understand client- vs. server-side code execution and Node. Next's "use client" directive might be confusing at first, but it helped me build a better understanding of how client-side interactions in the browser differ from server-executed code. It also gave me a better understanding of React hooks like useEffect.

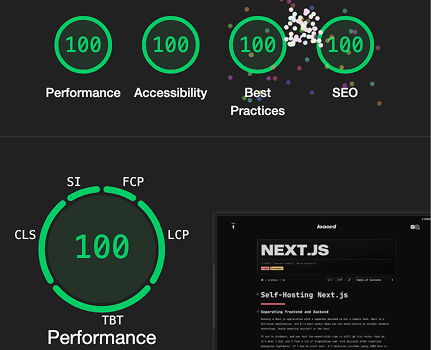

2. Impeccable Lighthouse scores

Perhaps the real reason I keep using Next.js is how effortless it felt to get a perfect Lighthouse score. When I was still using the classic LAMP stack for Web applications, it never felt this easy. I would run gulp tasks to minify and mangle JS and optimize images, and I'd spend time figuring out clever ways to reduce my bundle sizes.